Company Name:

Incubation Round:

Application Track:

Challenge Name:

Product Description

Datavillage allows each digitally active consumer to create and control their own ‘digital twin’, built by combining their personal behavioural data. Through this self-controlled digital twin, consumers decide, by giving or withholding consent, which behaviours can be extracted from it. Those behaviours are then shared with organisations and integrated into their digital experiences.

Organisations create deeper, interconnected experiences by ‘questioning’ the digital twin with the consumer’s consent. They receive answers to their questions, and only answers, which gives consumers the right level of privacy and control. The Datavillage platform consists of three products: a privacy-preserving, low-code data processing studio that lets organisations test, deploy and run their algorithms; a personal data passport that integrates the digital twin into the organisation’s different channels; and a personal data cockpit that lets consumers control their digital twins by managing access to their behavioural data and monitoring who uses it and how.
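To make the “answers and only answers” idea more concrete, here is a minimal Python sketch. It is not Datavillage’s implementation: the `DigitalTwin` class, the `APPROVED_QUESTIONS` catalogue and the question names are hypothetical. It assumes that consent is granted per organisation and per question, that organisations can only ask questions from a vetted catalogue (so they never receive raw events), and that every request is logged so the consumer can monitor who asked what, the role described for the cockpit.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical catalogue of vetted questions. Each one maps the private
# behavioural events to a derived answer; raw events are never returned.
APPROVED_QUESTIONS: dict[str, Callable[[list[dict]], object]] = {
    "watches_news": lambda events: any(e["genre"] == "news" for e in events),
    "top_genre": lambda events: (
        Counter(e["genre"] for e in events).most_common(1)[0][0] if events else None
    ),
}


@dataclass
class DigitalTwin:
    owner: str
    events: list[dict] = field(default_factory=list)
    # (organisation, question_id) pairs the consumer has consented to.
    consents: set[tuple[str, str]] = field(default_factory=set)
    # (organisation, question_id, allowed) entries, so the consumer can audit usage.
    access_log: list[tuple[str, str, bool]] = field(default_factory=list)

    def grant(self, organisation: str, question_id: str) -> None:
        if question_id not in APPROVED_QUESTIONS:
            raise KeyError(f"unknown question {question_id!r}")
        self.consents.add((organisation, question_id))

    def revoke(self, organisation: str, question_id: str) -> None:
        self.consents.discard((organisation, question_id))

    def ask(self, organisation: str, question_id: str) -> object:
        allowed = (organisation, question_id) in self.consents
        self.access_log.append((organisation, question_id, allowed))
        if not allowed:
            raise PermissionError(f"{organisation} has no consent for {question_id!r}")
        # Only the computed answer leaves the twin, not the underlying events.
        return APPROVED_QUESTIONS[question_id](self.events)


# Usage: a broadcaster asks one consented question about a (hypothetical) consumer.
twin = DigitalTwin("alice", [{"genre": "news"}, {"genre": "sport"}, {"genre": "news"}])
twin.grant("broadcaster", "top_genre")
print(twin.ask("broadcaster", "top_genre"))  # news
```

Restricting organisations to a catalogue of approved questions is one simple way to guarantee that only answers leave the twin; in practice, arbitrary algorithms would need a sandboxed execution environment instead.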

To enable data interoperability between the personal data of any data provider and the multimedia objects of the broadcaster (VRT), we use schema.org as the base ontology.

From schema.org, we mainly use Action entities to describe user actions and CreativeWork entities to describe media objects. When necessary (for example, when working with musical multimedia objects), we complement the description of musical entities with musicontology (the Music Ontology).

We also use the Linked Open Data cloud (for example DBpedia) to categorise media objects by category, type, …
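The Python sketch below illustrates how the schema.org, musicontology and DBpedia usage described above might fit together in JSON-LD. It is illustrative only: the user WebID, the example.org media IRIs and the helper functions `consumption_action` and `group_by_genre` are hypothetical, and linking to DBpedia through the schema.org `genre` property (which accepts a URL) is one possible choice among several.

```python
import json
from collections import defaultdict

# schema.org as the default vocabulary, plus a prefix for the Music Ontology.
CONTEXT = ["https://schema.org", {"mo": "http://purl.org/ontology/mo/"}]


def consumption_action(action_type: str, user_webid: str, media: dict, start_time: str) -> dict:
    """Describe a user consuming a media object as a schema.org Action (JSON-LD)."""
    return {
        "@context": CONTEXT,
        "@type": action_type,  # e.g. "WatchAction" or "ListenAction"
        "agent": {"@type": "Person", "@id": user_webid},
        "object": media,
        "startTime": start_time,
    }


def group_by_genre(actions: list[dict]) -> dict[str, list[str]]:
    """Group consumed media IRIs by their DBpedia genre IRI (hypothetical helper)."""
    groups: dict[str, list[str]] = defaultdict(list)
    for action in actions:
        media = action["object"]
        groups[media.get("genre", "unknown")].append(media["@id"])
    return dict(groups)


# Hypothetical media objects; genres point at DBpedia resources for categorisation.
episode = {
    "@type": "TVEpisode",
    "@id": "https://example.org/vrt/episode/123",
    "genre": "http://dbpedia.org/resource/Documentary_film",
}
track = {
    # schema.org type complemented by a Music Ontology class
    "@type": ["MusicRecording", "mo:Track"],
    "@id": "https://example.org/vrt/track/456",
    "genre": "http://dbpedia.org/resource/Jazz",
}

webid = "https://alice.example.org/profile/card#me"  # hypothetical Solid WebID
actions = [
    consumption_action("WatchAction", webid, episode, "2021-03-01T20:00:00Z"),
    consumption_action("ListenAction", webid, track, "2021-03-02T08:15:00Z"),
]
print(json.dumps(actions[1], indent=2))
print(group_by_genre(actions))
```

Keeping the user action and the media object in one shared vocabulary is what lets data from any provider be matched against the broadcaster’s catalogue without custom mappings per source.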

For consent management, we use the GConsent ontology to formalise the consent given by the end user to the broadcaster.
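As an illustration, a consent record might be expressed in JSON-LD as in the Python sketch below. The namespace `https://w3id.org/GConsent#` and the term names used (`gc:Consent`, `gc:isConsentForDataSubject`, `gc:forPurpose`, `gc:forPersonalData`, `gc:hasStatus`, `gc:Given`, `gc:Withdrawn`) reflect our reading of GConsent and should be checked against the published ontology; the helper functions and the example.org IRIs are hypothetical.

```python
import json

# Assumed GConsent namespace and terms: verify against the published ontology.
CONTEXT = {"gc": "https://w3id.org/GConsent#"}
# Assumption: only explicitly given consent permits processing.
VALID_STATUSES = {"gc:Given"}


def consent_record(consent_id: str, data_subject: str, purpose: str,
                   personal_data: str, status: str = "gc:Given") -> dict:
    """Build a JSON-LD consent record linking a data subject, a purpose and personal data."""
    return {
        "@context": CONTEXT,
        "@id": consent_id,
        "@type": "gc:Consent",
        "gc:isConsentForDataSubject": {"@id": data_subject},
        "gc:forPurpose": {"@id": purpose},
        "gc:forPersonalData": {"@id": personal_data},
        "gc:hasStatus": {"@id": status},
    }


def withdraw(record: dict) -> dict:
    """Return a copy of the record whose status is switched to withdrawn."""
    return {**record, "gc:hasStatus": {"@id": "gc:Withdrawn"}}


def permits(record: dict, purpose: str) -> bool:
    """True only if the record covers this purpose and its status allows processing."""
    return (record["gc:forPurpose"]["@id"] == purpose
            and record["gc:hasStatus"]["@id"] in VALID_STATUSES)


# Usage with hypothetical IRIs for the end user, purpose and data category.
PURPOSE = "https://example.org/purpose/personalised-recommendations"
record = consent_record(
    "https://example.org/consent/1",
    "https://alice.example.org/profile/card#me",
    PURPOSE,
    "https://example.org/data/viewing-history",
)
print(json.dumps(record, indent=2))
print(permits(record, PURPOSE))            # True
print(permits(withdraw(record), PURPOSE))  # False
```

Withdrawal produces a new record rather than mutating the old one, which keeps earlier consent states available for auditing.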

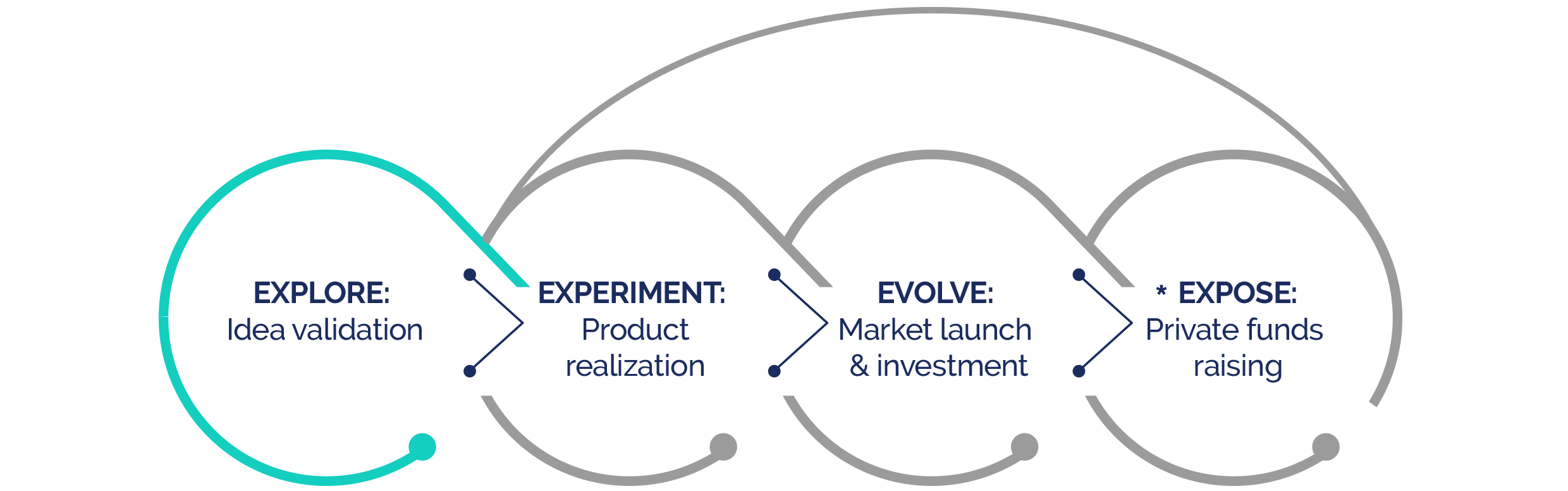

*Expose phase is open to all Experiment phase teams

Location:

Sector:

Website:

Company maturity:

Investment level:

Funding raised:

Collaboration opportunity:

Company Description

We are involved in the Solid project and are progressively contributing to the standardisation of how data is described in end users’ personal data stores.

Quentin Felice

Co-founder – business focus. Tech entrepreneur and corporate intrapreneur with more than 10 years’ experience in launching and scaling tech startups and managing corporate venturing.

Frederic Lebeau

Co-founder – product focus. Technology veteran with more than 20 years of experience in cybersecurity, digital transformation, enterprise architecture and IT innovation.

Philippe Duschesne

Tech lead. Open and linked data specialist and full-stack developer who has worked on projects for spin-offs, scale-ups and international governments.

Loic Quertenmont

Data science lead. Loic has years of experience leading projects that apply data science and mathematical modelling to complex problems. For about ten years, he analysed the 50 PB of data produced yearly by CERN’s Large Hadron Collider (LHC) in search of evidence of new physics.