Data Value Chains: from Definition to Realization

Context

In February 2020, the European Commission announced the European Strategy for Data, which aims to create a single market in which data can be shared and exchanged across sectors efficiently and securely within the EU. Behind this endeavour stands the Commission’s goal of advancing the European data economy in a way that fits the European values of self-determination, privacy, transparency, security and fair competition. This is especially important as the European data economy continues to grow rapidly: from 301 billion euros (2.4 % of GDP) in 2018 to an estimated 829 billion euros (5.8 % of GDP) by 2025.

The centrepiece of the European Data Strategy is the concept of “data spaces”. A Data Space is defined as a decentralized infrastructure for trustworthy data sharing and exchange in data ecosystems, based on commonly agreed principles. To realize Data Spaces, a European ‘soft infrastructure’ is needed: a set of agreements on legal, technical, functional and operational aspects, specified by the general authorisation framework and designed for wide adoption by users. Based on this framework, actors providing and/or consuming data, as well as software vendors, can implement their own solutions.

In parallel, the European Commission has been fostering both “Big Data Innovation Hubs” and “Supporting the emergence of data markets and the data economy”, to ensure that this ‘soft infrastructure’ and the actual ‘hard infrastructure’ are made available. Big Data Innovation Hubs aim to break “data silos” and to stimulate the sharing, re-use and trading of data assets by launching a second-generation data-driven innovation hub, federating data sources and fostering collaborative initiatives with relevant digital innovation hubs. This should promote new business opportunities, notably for SMEs, as part of the Common European Data Space.

This is what the REACH project does: it refines the earlier EDI incubator by better addressing the challenges of data sharing, governance and reuse among the multiple stakeholders of data value chains. To that end, REACH goes beyond EDI by not only connecting corporate data providers with innovators, but also engaging with Digital Innovation Hubs (DIHs) and aiming to develop data value chains and connect them with innovators to create new value-added services (e.g. services for end users, but also services that optimise the connections and relations among entities within the same value chain).

Data Value Chains (DVCs): definition

To better understand what a Data Value Chain (DVC) is and what it is useful for, let’s first look at where the term comes from. A value chain (VC) is a set of interlinked resources and activities that a firm operating in a specific industry performs, from the acquisition of raw materials to the delivery of a valuable product (i.e., a good and/or service) to the end customer. The notion of the value chain was developed by Porter in the 1980s.

A Data Value Chain (DVC), in turn, is a mechanism that defines a set of repeatable processes to extract value from data step by step throughout its entire lifecycle, from raw data to valuable insights. A DVC consists of four main steps:

- Data generation: Capture and record data;

- Data collection: Collect, validate and store data;

- Data analysis: Process and analyze data to generate new potential insights;

- Data exchange: Expose the data outputs to use, whether internally or externally with partners.
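The four steps above can be sketched as a chain of small functions. This is only an illustrative sketch: the `Reading` record, the sensor names and the choice of a per-sensor mean as the "insight" are assumptions made for the example, not part of any REACH tooling.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional


@dataclass
class Reading:
    """One raw record, e.g. a sensor measurement (hypothetical shape)."""
    sensor_id: str
    value: Optional[float]


def generate() -> list:
    # Data generation: capture and record raw data (hard-coded here).
    return [
        Reading("s1", 20.0),
        Reading("s1", 22.0),
        Reading("s2", None),  # a faulty capture
        Reading("s2", 30.0),
    ]


def collect(raw: list) -> list:
    # Data collection: validate the records and keep (store) the valid ones.
    return [r for r in raw if r.value is not None]


def analyse(stored: list) -> dict:
    # Data analysis: derive an insight, here the mean value per sensor.
    by_sensor = {}
    for r in stored:
        by_sensor.setdefault(r.sensor_id, []).append(r.value)
    return {sensor: mean(values) for sensor, values in by_sensor.items()}


def exchange(insights: dict) -> list:
    # Data exchange: expose the outputs in a form that partners can consume.
    return [{"sensor": s, "mean_value": v} for s, v in sorted(insights.items())]


result = exchange(analyse(collect(generate())))
print(result)
```

In a multi-stakeholder chain, each function could be run by a different party, with only the output of one step handed to the next.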

Big Data is a logical consequence of the importance that digital technology and services have taken on in our lives, with data multiplying at an unprecedented rate. This has given rise to the Big Data Value Chain (BDVC), a derivative of the Data Value Chain (DVC) that makes it possible to extract hidden, reliable insights by relying on the strengths of Big Data to process data of huge volume, high velocity, ample variety and (sometimes dubious) veracity. It generally consists of up to five distinct phases:

- Data acquisition: Data acquisition refers to the process of obtaining raw data;

- Data pre-processing: Data pre-processing involves validating, cleaning, reducing and integrating the data to prepare it for storage;

- Data storage: Data storage includes not only storage but also the management of large-scale datasets;

- Data analysis: Data analysis uses analytical methods or tools to model, inspect and mine data to extract value;

- Data visualization: Data visualization is a method of assisting data analysis. It is a meaningful representation of complex data to show hidden patterns.
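As a rough illustration of how these five phases fit together, the sketch below runs a toy dataset through each of them using only the Python standard library. The two data sources, the table layout and the text-based bar chart are assumptions chosen for brevity; a real BDVC would rely on distributed storage and dedicated analytics and visualization tools.

```python
import sqlite3


def acquire():
    # Data acquisition: obtain raw records (two hypothetical sources).
    source_a = [("m1", "2021-01-01", 10.0), ("m1", "2021-01-02", 14.0)]
    source_b = [
        ("m2", "2021-01-01", 7.0),
        ("m2", "2021-01-01", 7.0),   # duplicate record
        ("m2", "2021-01-02", None),  # missing value
    ]
    return source_a, source_b


def preprocess(*sources):
    # Data pre-processing: integrate the sources, drop invalid rows
    # (validation and cleaning) and remove duplicates (reduction).
    merged = [row for source in sources for row in source]
    valid = [row for row in merged if row[2] is not None]
    return sorted(set(valid))


def store(rows):
    # Data storage: persist the records in a queryable store (in memory here).
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE readings (machine TEXT, day TEXT, value REAL)")
    db.executemany("INSERT INTO readings VALUES (?, ?, ?)", rows)
    return db


def analyse(db):
    # Data analysis: aggregate the stored data, here a total per machine.
    query = (
        "SELECT machine, SUM(value) FROM readings "
        "GROUP BY machine ORDER BY machine"
    )
    return db.execute(query).fetchall()


def visualise(totals):
    # Data visualization: render the result as a simple text bar chart.
    return "\n".join(
        f"{machine} {'#' * int(total)} {total}" for machine, total in totals
    )


totals = analyse(store(preprocess(*acquire())))
chart = visualise(totals)
print(chart)
```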

In REACH, we use the term DVC for simplicity, although we are essentially referring to the BDVC, since the main aim of the project is to address industrial challenges by promoting sustainable digital solutions that apply Big Data and data analytics. Consequently, within REACH, a Data Value Chain (DVC) can be defined as a multi-stakeholder, data-driven business model in which data is securely exchanged among parties, either persons or organisations, with the aim of creating value for all involved stakeholders. The data lifecycle thus spans different parties: data is generated (captured and recorded), collected (validated and stored), analysed (processed to generate new insights and knowledge) and exploited (its outputs put to use, whether internally or by trading them) by different partners. In this way, the heterogeneous data needs of multiple stakeholders are correlated to generate insights.

REACH agrees with Edward Curry’s statement that “a well-functioning working data ecosystem must bring together the key stakeholders with a clear benefit for all.” For REACH, DVCs bring together data providers, data end users (potentially the same data providers, exploiting the solutions derived from their data), solution providers and digital innovation hubs, which help in the incubation process. This process is triggered by a challenge, which is addressed through a data-driven, often multi-stakeholder, solution.

Data Value Chains (DVCs): realization

Support is urgently needed for innovation experiments that promote the development of trusted, secure and privacy-aware analytics solutions, allowing proprietary industrial data to be shared securely along with personal data. Such support must also ensure compliance with relevant legislation (such as data protection legislation). This is what the REACH project aims to do through its REACH Toolbox, a set of digital trust services that facilitate the development of new Data Value Chains.

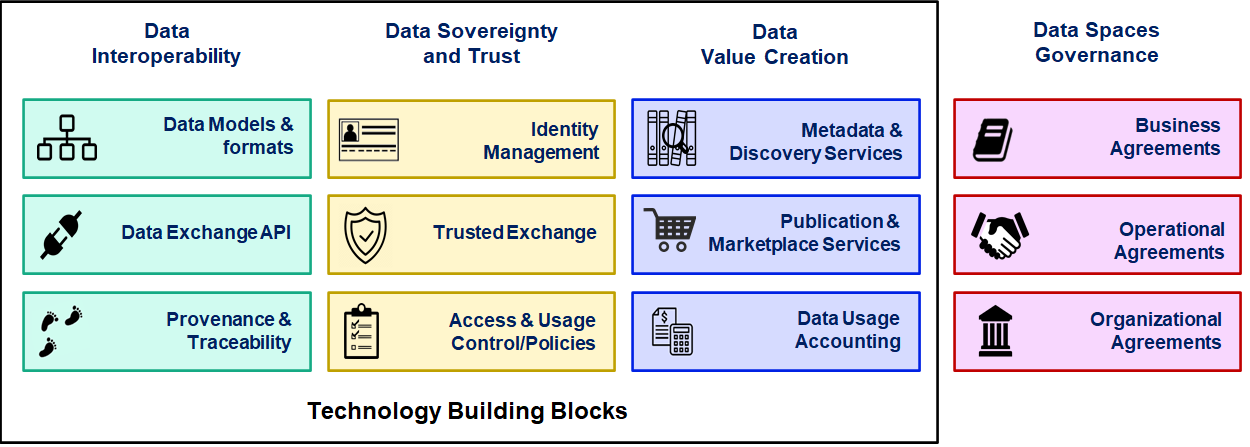

However, these tools do not cover the whole lifecycle of multi-stakeholder data analytics outlined above, and we believe they should be combined with, and complemented by, the emerging open source building blocks of European Data Spaces. The design and implementation of a data space comprises a number of building blocks of two types: technical building blocks and governance building blocks.

From a technical perspective, a data space can be understood as a collection of technical components facilitating a dynamic, secure and seamless flow of data/information between parties and domains. These components can be implemented in many different ways and deployed on different runtime frameworks (e.g., Kubernetes).

Governance building blocks are artefacts that regulate the business relationships between the groups of stakeholders that can be identified in data-driven business ecosystems: data owners, data providers, data processors and data marketplace operators.

The first phase in establishing data spaces is the convergence of current European initiatives (e.g. IDSA, Data Sharing Coalition, MyData, BDVA, IHAN, FIWARE or Gaia-X) in order to co-create a single result that will be accepted and adopted by a critical mass of stakeholders. This is essentially the mission of the newly formed Data Spaces Business Alliance.

Currently, two main driving forces are pushing the creation of the building blocks that make up a Data Space, namely IDS and FIWARE. A brief summary of their complementary offerings is given next. REACH believes that companies taking part in its incubation program should not only consider using the tools provided in the REACH Toolbox but should also look at the assets brought forward by IDS and FIWARE.

IDS building blocks for Data Spaces

The International Data Spaces Association (IDSA) is a consortium formed by companies, scientists, lawmakers and all other relevant stakeholders, with the aim of creating a technical standard to boost the data economy worldwide. IDSA has defined the International Data Space concept, a space in which data is shared in a trustworthy and secure manner. For that, partners belonging to IDSA have developed different components.

The main component is the IDS Connector, the central technical component for secure and trusted data exchange. The connector sends data directly from the provider’s device or database to the recipient within a trusted, certified data space, so the original data provider always maintains control over the data and sets the conditions for its use. The connector uses technology that puts the data inside a sort of virtual “container”, which ensures that it is used only as agreed upon in the terms set by the parties involved.

IDS Connectors can publish the description of their data endpoints at an IDS meta-data broker. The IDS meta-data broker is a catalogue in which, using a standardized vocabulary, a data provider can promote its data, including the access restrictions, pricing and other conditions that apply to it. This allows potential data consumers to look up available data sources and data in terms of content, structure, quality, timeliness and other attributes.
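The publish-and-look-up role of such a catalogue can be illustrated with a small in-memory sketch. It does not use the actual IDS information model or any IDS broker API: the `Offering` fields, the `Catalogue` class and the example offerings are all hypothetical and only mirror the attributes mentioned above (content keywords, access restrictions, pricing).

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Offering:
    """Hypothetical, simplified self-description of a data endpoint."""
    title: str
    provider: str
    keywords: frozenset
    price_eur: float
    access: str  # e.g. "open" or "restricted"


class Catalogue:
    """Toy stand-in for a metadata broker: providers publish, consumers search."""

    def __init__(self):
        self._offerings = []

    def publish(self, offering: Offering) -> None:
        self._offerings.append(offering)

    def search(self, keyword: str, max_price: Optional[float] = None) -> list:
        # Match on keyword, optionally filter by price, cheapest first.
        hits = [o for o in self._offerings if keyword in o.keywords]
        if max_price is not None:
            hits = [o for o in hits if o.price_eur <= max_price]
        return sorted(hits, key=lambda o: o.price_eur)


catalogue = Catalogue()
catalogue.publish(Offering("Machine vibration data", "factory-a",
                           frozenset({"manufacturing", "sensor"}), 100.0, "restricted"))
catalogue.publish(Offering("City bus positions", "transit-b",
                           frozenset({"mobility", "sensor"}), 0.0, "open"))
catalogue.publish(Offering("Retail sales", "shop-c",
                           frozenset({"retail"}), 50.0, "restricted"))

sensor_titles = [o.title for o in catalogue.search("sensor")]
free_titles = [o.title for o in catalogue.search("sensor", max_price=0.0)]
print(sensor_titles, free_titles)
```

Note that only the descriptions are held in the catalogue; the data itself stays with the provider until an exchange is agreed.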

As mentioned before, data sources can be described using different vocabularies (ontologies, reference data models or metadata elements). The vocabulary provider offers and manages vocabularies from different domains, which are used to annotate data sources.

The IDS Connector allows a set of rules and restrictions, i.e., a set of governance rules, to be established in order to decide who is able to access the data source, when, under what conditions, and so on. The IDS clearing house component provides decentralized and auditable traceability of all transactions if needed. In addition, this intermediary provides clearing and settlement services for all financial and data-exchange transactions within the IDS.
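The interplay between access rules and an auditable transaction record can be sketched as follows. This is a conceptual illustration under strong simplifications, not the IDS usage-control language or the clearing house interface: `UsagePolicy`, `TransactionLog` and `request_access` are hypothetical names, and the log is a single local hash chain rather than a decentralized service.

```python
import hashlib
import json
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class UsagePolicy:
    """Hypothetical, simplified usage policy attached to a data source."""
    allowed_consumers: frozenset
    allowed_purposes: frozenset
    valid_until: date

    def permits(self, consumer: str, purpose: str, on: date) -> bool:
        # Who may access the data, for what purpose, and until when.
        return (consumer in self.allowed_consumers
                and purpose in self.allowed_purposes
                and on <= self.valid_until)


class TransactionLog:
    """Append-only log; each entry is chained to the previous one by a hash."""

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(previous_hash: str, record: dict) -> str:
        payload = json.dumps({"prev": previous_hash, "record": record}, sort_keys=True)
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def append(self, record: dict) -> None:
        previous_hash = self.entries[-1]["hash"] if self.entries else ""
        self.entries.append({"record": record,
                             "hash": self._digest(previous_hash, record)})

    def verify(self) -> bool:
        # Recompute the chain; any altered record breaks it.
        previous_hash = ""
        for entry in self.entries:
            if entry["hash"] != self._digest(previous_hash, entry["record"]):
                return False
            previous_hash = entry["hash"]
        return True


def request_access(policy, log, consumer, purpose, on):
    # Evaluate the policy and record the decision, whether granted or not.
    granted = policy.permits(consumer, purpose, on)
    log.append({"consumer": consumer, "purpose": purpose,
                "date": on.isoformat(), "granted": granted})
    return granted


policy = UsagePolicy(frozenset({"acme"}), frozenset({"analytics"}), date(2030, 12, 31))
log = TransactionLog()
first = request_access(policy, log, "acme", "analytics", date(2025, 1, 1))
second = request_access(policy, log, "other-corp", "analytics", date(2025, 1, 1))
print(first, second, log.verify())
```

Chaining the entries by hash is one simple way to make later tampering detectable, which is the property an audit trail needs.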

In order to build a Data Value Chain and increase the value of the data sources, IDS provides the app store component. App stores provide data apps (DVCs in REACH jargon) which can be deployed in IDS Connectors to execute tasks such as transformation, aggregation or analytics on the data.

Last, Identity Providers offer a range of services to create, maintain, manage and validate identity information of and for IDS participants and components.

These components aim to cover the core aspects of data management described by the European Data Spaces initiative. The IDS Connector is directly related to data governance and data sovereignty, as it allows an organization to share its data sources without losing control over them, i.e., knowing who is accessing its data, when and how. In the same way, the IDS clearing house provides decentralized traceability of all data transactions performed. Regarding data brokerage, IDS offers the meta-data broker and the vocabulary provider: the former enables a system to publish metadata about a data source, such as connection details and pricing (if any), while the latter provides a set of predefined vocabularies to annotate the offered data, fostering interoperability. Lastly, the IDS app stores can provide data-enrichment apps that are useful for performing data analytics and anonymization tasks on the offered data.

FIWARE building blocks (i4Trust) for Data Spaces

FIWARE Foundation is a non-profit organisation that drives the definition and encourages the adoption of open standards to ease the development of portable and interoperable smart solutions in a faster, easier and more affordable way, avoiding vendor lock-in scenarios. FIWARE brings a curated framework of open source software platform components which can be assembled with each other and with third-party components to build platforms.

The FIWARE software architecture revolves around the management of a Digital Twin data representation: an entity that digitally represents a real-world physical asset (e.g. a bus in a city, a milling machine in a factory) or a concept (e.g., a weather forecast, a product order).

FIWARE provides components to materialize the different technical building blocks required for the creation of Data Spaces. Regarding data interoperability, two elements are critical: a) an API to get access to the data and b) the data models describing the attributes and semantics associated with the different types of Digital Twins being considered. The NGSI-v2 API is a simple yet powerful RESTful API for getting access to context / Digital Twin data; its successor, NGSI-LD, is standardized by ETSI. NGSI-v2 is used as the data integration API and is implemented by the core component of the FIWARE architecture, the so-called Context Broker. It makes it possible to manage context information in a highly decentralized and large-scale manner. NGSI-v2 offers very simple, and therefore easy-to-use, operations for creating, updating and consuming context / Digital Twin data, but also more powerful operations such as sophisticated queries, including geo-queries, and subscriptions to get notified of changes to Digital Twin entities.
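To make these operations more concrete, the sketch below builds (but does not send) four NGSI-v2 requests: creating an entity, a filtered query, a geo-query and a subscription. The broker address, the `Bus` entity with its attributes and the notification URL are assumptions made for the example; only the `/v2/entities` and `/v2/subscriptions` resources and the general payload shapes follow the NGSI-v2 specification.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

BROKER = "http://localhost:1026"  # assumption: a locally running Context Broker

# A Digital Twin of a bus in NGSI-v2 normalized form (illustrative attributes).
bus = {
    "id": "bus-001",
    "type": "Bus",
    "speed": {"type": "Number", "value": 42},
    "location": {
        "type": "geo:json",
        "value": {"type": "Point", "coordinates": [-2.93, 43.26]},
    },
}

# Notify a (hypothetical) endpoint whenever the speed of any bus changes.
subscription = {
    "description": "Notify on bus speed changes",
    "subject": {
        "entities": [{"idPattern": ".*", "type": "Bus"}],
        "condition": {"attrs": ["speed"]},
    },
    "notification": {
        "http": {"url": "http://localhost:8080/notify"},
        "attrs": ["speed"],
    },
}


def json_post(path, payload):
    # Build a POST request carrying a JSON body.
    return Request(
        BROKER + path,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def get(path, **params):
    # Build a GET request with URL-encoded query parameters.
    return Request(f"{BROKER}{path}?{urlencode(params)}", method="GET")


create_req = json_post("/v2/entities", bus)
query_req = get("/v2/entities", type="Bus", q="speed>40")
geo_req = get("/v2/entities", type="Bus", georel="near;maxDistance:1000",
              geometry="point", coords="43.26,-2.93")
subscribe_req = json_post("/v2/subscriptions", subscription)

for req in (create_req, query_req, geo_req, subscribe_req):
    print(req.get_method(), req.full_url)
```

Passing any of these objects to `urllib.request.urlopen` would send it to the broker, provided one is actually running at the assumed address.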

Also, FIWARE’s Smart Data Models initiative provides a library of data models described in JSON and JSON-LD format, compatible with the NGSI-v2 and NGSI-LD APIs respectively, which can also be useful for defining other RESTful interfaces for accessing Digital Twin data. Data models published under the initiative are compatible with schema.org and comply with existing de facto sectoral standards where these exist.

Completing the picture of building blocks for data interoperability, FIWARE brings components that provide the means for tracing and tracking in the process of data provision and data consumption/use. These components provide the basis for a number of important functions, from identification of the provenance of data to audit-proof logging of NGSI-v2 transactions. For Data Spaces with strong requirements on transparency and certification, FIWARE brings components (i.e., Canis Major) that ease the recording of transaction logs in different Distributed Ledgers / Blockchains.

Regarding the building blocks for data value creation, the FIWARE Business Application Ecosystem (BAE) components enable the creation of Marketplace services that participants in Data Spaces can rely on to publish offerings around the data assets they own. FIWARE also comprises components for publishing the data resources linked to the data assets around which offerings are managed through the FIWARE Data Marketplace: the Idra publication platform and an extended version of CKAN, an open data publication platform widely adopted in the market. These extensions support enhanced data management capabilities and integration with FIWARE technologies. CKAN is thus not limited to listing data resources linked to static files in its catalogue; it can also list data resources linked to NGSI-v2 requests served by Context Broker components deployed in a Data Space. This makes it possible to discover data resources by relying on the DCAT support of the publication platforms.